Sacrificing Accuracy for Simplicity

Ric Kosiba

Feb 10, 2026

I’ve had an interesting career providing planning technologies to contact centers, and through it have worked with many amazing contact center analysts and executives. I have seen a lot of interaction data. And I am certain that this is true: every contact type, every individual call center, every contact center location is truly different.

Each center’s customers have different expectations. Each operation has different clientele with different contact patterns and preferences, and each has different reasons for calling in the first place. Each individual contact center has its own culture and nuances.

This has a huge impact on how you approach modeling a contact center operation. Yet every single workforce management vendor treats its contact center customers as though they were all the same, and each vendor uses a very similar, generic staffing model when developing requirements.

Your Erlang C, your Erlang A, your modified Erlang calculation all share a basic assumption: every contact center is identical, whether it’s a customer service, sales, support, chat, or emergency services operation.

It’s weird.
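To make that shared assumption concrete, here is a minimal sketch of the textbook Erlang C calculation in Python. The function names and parameters are hypothetical; the math is the standard formula, which assumes random (Poisson) arrivals, exponentially distributed handle times, and callers who wait forever without abandoning. Notice that its only inputs are volume, average handle time, agent count, and the service-level threshold. Nothing in it describes a particular center’s callers, their patience, or its routing.

```python
import math


def erlang_c_wait_probability(traffic: float, agents: int) -> float:
    """Erlang C probability that an arriving contact has to wait.

    traffic is the offered load in Erlangs (calls * AHT / interval length).
    """
    if agents <= traffic:
        return 1.0  # unstable queue: with infinite patience, everyone waits
    # Build a^k / k! iteratively to avoid huge factorials; sum k = 0 .. N-1.
    term = 1.0
    total = 0.0
    for k in range(agents):
        if k > 0:
            term *= traffic / k
        total += term
    # term now holds a^(N-1)/(N-1)!, so a^N/N! * N/(N-a) equals term * a/(N-a).
    waiting = term * traffic / (agents - traffic)
    return waiting / (total + waiting)


def erlang_c_service_level(calls: float, aht_sec: float, interval_sec: float,
                           agents: int, threshold_sec: float) -> float:
    """Fraction of contacts answered within threshold_sec under Erlang C."""
    traffic = calls * aht_sec / interval_sec
    if agents <= traffic:
        return 0.0
    p_wait = erlang_c_wait_probability(traffic, agents)
    return 1.0 - p_wait * math.exp(-(agents - traffic) * threshold_sec / aht_sec)


def erlang_c_required_agents(calls: float, aht_sec: float, interval_sec: float,
                             threshold_sec: float, target_sl: float) -> int:
    """Smallest agent count whose Erlang C service level meets target_sl."""
    if not 0.0 < target_sl < 1.0:
        raise ValueError("target_sl must be strictly between 0 and 1")
    agents = max(1, math.ceil(calls * aht_sec / interval_sec))
    while erlang_c_service_level(calls, aht_sec, interval_sec,
                                 agents, threshold_sec) < target_sl:
        agents += 1
    return agents
```

For example, `erlang_c_required_agents(100, 180, 1800, 20, 0.80)` (100 calls in a half hour, a 180-second AHT, and 80% answered within 20 seconds) returns 14. Any two centers with those same inputs get the same answer, however differently their customers behave.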

Is there a reason you’ve never seen a validation chart of your performance?

In all this time, I have often wondered why our industry, and our vendors, never try to prove their accuracy.

Years ago, when working for a big airline, I was under the gun to build a contact center simulation model to answer some what-ifs that were critically important to our company. My model was well put together; it used the best discrete-event simulation modeling technology, and it seemed logical and correct.

But I had both a critical due date and a validation problem. I couldn’t get the model to validate against history, and my due date was getting close. I couldn’t prove that my model was correct.

The pressure was crazy. My operational team reported to finance, and word from on high was that I needed to get the model done and finish the what-if. When I nervously noted that my models weren’t tying out to actuals, I was given the order: “Just don’t show the validation!”

My college professors would be horrified.

I stuck to my guns and ended up finding the data error, getting a great validation, learning something important, and being two weeks late on my analysis. (I remember presenting this to my boss, Don Barber, and our customer service VP, Fay Beauchine, on, as my slide said, “The Occasion of my Thirtieth Birthday”).

A validation is simply proof that the model on which you base your schedules, contact center staffing plans, and what-if analyses is accurate and mimics the operation faithfully.
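One way to picture a validation: replay history through the model, feeding it the volume each interval actually received and the staff who were actually there, then compare what the model predicts with what actually happened. The sketch below is a hypothetical illustration, not Real Numbers’ process. It compares service level only, and the scoring metrics (mean absolute error, bias, and the share of intervals within a tolerance) and the `IntervalResult` structure are assumptions; a real validation would check every output the analysis depends on.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class IntervalResult:
    """One historical interval replayed through the model (hypothetical structure)."""
    interval: str       # label only, e.g. "2026-02-10 09:00"
    actual_sl: float    # service level the center actually achieved (0-1)
    modeled_sl: float   # service level the model predicts, given the actual
                        # volume, handle times, and staff present that interval


def validation_summary(results: list[IntervalResult],
                       tolerance: float = 0.05) -> dict[str, float]:
    """Score how closely modeled service level tracks history."""
    if not results:
        raise ValueError("need at least one interval to validate")
    errors = [r.modeled_sl - r.actual_sl for r in results]
    return {
        # Average size of the miss, in service-level points (0.03 = 3 points).
        "mean_abs_error": mean(abs(e) for e in errors),
        # Positive bias: the model is optimistic about performance.
        "bias": mean(errors),
        # Share of intervals where the model lands within the tolerance.
        "within_tolerance": sum(abs(e) <= tolerance for e in errors) / len(errors),
    }
```

With two hypothetical intervals where the model predicts 82% and 66% against actuals of 80% and 70%, the summary shows a mean absolute error of about 3 points and a bias of about -1 point. A persistent positive bias would mean the model thinks the center performs better than it does, so plans built on it would understaff.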

I suspect that the reason you have never seen a validation of your staffing models is that, as in my case, the models just don’t validate and are not correct. I have my doubts that WFM vendors have ever tried to build one. Which raises the question: “Is our stuff even accurate?”

And here is how I know. Any time we build a new set of models for a new customer and see how accurate they are, the very first thing we want to do is show them off. It boosts confidence in the analyses our system helps create, but, probably more to the point, we are just so freaking proud of it that we want to show our friends.

Here are some things I learned while developing a validation process

First, accuracy is not just about the technique you use. It is really about the assumptions of your staffing model.

There are many modeling methods that are valid.

There is a lot that goes into such a model. The distributions of call arrivals, of customer patience, of handle time, and of staffing and adherence are important to consider. I am probably forgetting some.
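As an illustration of how those distributions enter a model, here is a deliberately small discrete-event simulation of one queue over a single half-hour interval, the kind of queue that Erlang A describes analytically. It assumes Poisson arrivals and exponential handle times and patience purely to keep the sketch short; a real model would fit each distribution to the center’s own history and would also handle routing, schedule adherence, and concurrency, all of which this sketch ignores. The function name and parameters are hypothetical. Unlike a requirements-only calculation, a single run reports service level, ASA, abandon rate, and occupancy together.

```python
import heapq
import random


def simulate_interval(calls: float, aht_sec: float, patience_sec: float,
                      agents: int, interval_sec: float = 1800.0,
                      threshold_sec: float = 20.0, seed=None) -> dict:
    """One replication of a single-queue interval with abandonment (hypothetical).

    Assumes Poisson arrivals, exponential handle times and patience, FIFO
    service, and an empty queue with all agents idle at the start.
    """
    if agents < 1 or min(calls, aht_sec, patience_sec, interval_sec) <= 0:
        raise ValueError("need at least one agent and positive inputs")
    rng = random.Random(seed)
    rate = calls / interval_sec  # arrivals per second

    # Generate arrival times: exponential gaps give a Poisson process.
    arrivals = []
    t = rng.expovariate(rate)
    while t < interval_sec:
        arrivals.append(t)
        t += rng.expovariate(rate)

    free_at = [0.0] * agents  # min-heap: when each agent is next free
    answered = abandoned = answered_in_threshold = 0
    total_wait = busy_sec = 0.0

    # FIFO: each caller takes the earliest-free agent unless they give up first.
    for arrive in arrivals:
        patience = rng.expovariate(1.0 / patience_sec)
        start = max(arrive, free_at[0])
        wait = start - arrive
        if wait > patience:
            abandoned += 1  # hangs up before an agent frees up; agent unaffected
            continue
        handle = rng.expovariate(1.0 / aht_sec)
        heapq.heapreplace(free_at, start + handle)
        answered += 1
        total_wait += wait
        if wait <= threshold_sec:
            answered_in_threshold += 1
        # Count only talk time that falls inside the interval toward occupancy.
        busy_sec += max(0.0, min(start + handle, interval_sec) - start)

    offered = len(arrivals)
    return {
        "offered": offered,
        # Abandons count against service level here; centers define SL differently.
        "service_level": answered_in_threshold / offered if offered else 1.0,
        "asa_sec": total_wait / answered if answered else 0.0,
        "abandon_rate": abandoned / offered if offered else 0.0,
        "occupancy": busy_sec / (agents * interval_sec),
    }
```

A single run is noisy, so in practice you would average many seeded replications before comparing the results with history.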

History must be correct: the contact performance data and the mapping of which group answers each contact stream need to match up.

Routing rules must be considered and explicitly modeled.

And validation can be iterative. It isn’t a “Here’s how many calls we expect, the handle time average, the service goal, and BAM(!) here are your requirements!” Checking actual performance is critical.

Validation can be vexing. Once you’ve built a validation process, producing accurate validation analyses can be straightforward, but given the complexity of our operations, the exceptions can be a tad mind-bending. Being off on your data or a routing assumption can frustrate your model.

You need to validate your model on the things that matter to your analysis. A calculation that only shows staffing requirements likely contains such big simplifications that the model is meaningless. I would bet dollars to donuts that most “requirements generators” are wrong, and capacity planning systems that focus on requirements are off.

A validation on someone else’s data is beside the point. When I chat about our system, I will always show a validation graph or four. That’s fine; they are genuinely real data. But what matters most is that we get real contact center data from you and show you the results of a model we built for you.

It was shocking to me how robust these validated models are. They might last forever. Models built twenty years ago are often still accurate (but, honestly, they should be re-validated a few times a year).

The validation process, once automated, can be quick. It takes good software design, but to the end-user, it can be easy.

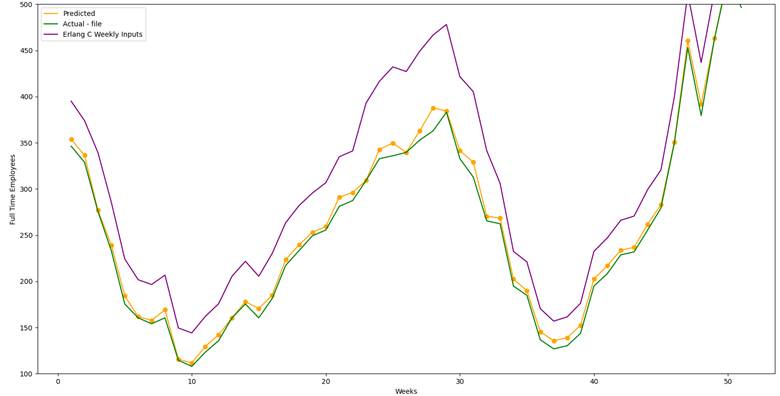

By the way, the chart above is a validation of staff required at a big contact center. It compares how many people the contact center actually had with how many agents our model said they would need, and with how many agents an Erlang C model predicts they would need. Shocking, huh?

Things I learned from building inaccurate models

I’ve made many modeling mistakes, and have assembled many processes that were wildly inaccurate. But because I did check the accuracy of my processes, we were able to catch the mistakes and were able to correct them long before the model was used to make decisions.

From my many years of experience messing up models, here is how you know your contact center capacity planning model is suspect:

It shows you staffing requirements, but not occupancy, abandons, concurrency, SL, and ASA, all at the same time

You input your own abandon rate or a mean time to abandon

You can run your model without any underlying data

You input your own volume distribution

You input schedule non-adherence

The Erlang C calculation is on the vendor website

You can use whatever model you choose in your vendor’s system (as you would in a spreadsheet); the vendor doesn’t care what math is used

There is no validation using your own history

Why sacrifice accuracy? Simplicity? Ease of software maintenance? The vendor’s lack of modeling chops?

Probably.

But there is a related reason. I expect this is also true: their customers haven’t required vendors to be accurate.

As a software guy, I know that the simpler you can make your code, the easier it is to build and maintain. When first building a system, you take shortcuts to bring it to market earlier (this is called a minimum viable product, or MVP). In it, you often delay building the hard stuff.

What’s scary is that the MVP you build is often sold to folks who then want you to add new features and move your focus onto something else. And the product you dreamed of building, the more robust system, never gets filled out. Simplifications end up sticking around.

(Interestingly, this time Real Numbers took a different tack: we started with the accurate models and validations, to see if such a process could be built, before we ever started working on a UI. It took us longer, but…)

If your underlying staffing model is wrong, your planning system (or your vendor’s new AI) is just confidently wrong.

Regardless of a vendor’s UI or AI interface, the point of the software is to determine staffing levels and to provide service performance what-ifs.

I expect that the industry will start developing tools to use AI agents to automate much of what WFM managers do. But, if you build an AI wrapper around an inaccurate process, well, you know. This is where smart workforce managers are sorely needed.

Generic AI will be just as inaccurate as the spreadsheet math that sits behind many of our systems. It will look great and make a sexy demo. Over time, it will also increase the speed and complexity of the questions being asked. And I suppose the inaccuracies will get harder to miss as the contradictions typical of simple spreadsheet math start to pop up.

And given the black box nature of AI, these contradictions and obvious mistakes will be hard to explain. Which is why AI needs to be tied to an accurate and validated simulation model and used by an experienced workforce manager. We don’t need easier and faster wrongness.

Our WFM industry grew out of a desire to hunt down inefficiencies

Every workforce planning professional I have ever met has a peculiar focus. We got into WFM because we enjoy chasing down inefficiencies and fixing them. We are strangely focused on them, and it controls our ethos. It is kind of cool.

We love showing ROI, we love doing great analyses. We are leaned on to help manage big operations. We often become the “right hand man” of the operations VP (and many of us become that VP, later in our career, because we understand the big picture of our operation).

But it irks me that the foundational math at the core of many of the systems that help us manage these inefficiencies is itself costly. Validating models is a basic practice that we ignore at our peril. Erlang C can’t model reality. That’s why your plan keeps surprising you.

If your capacity planning system uses spreadsheet math, there is no ROI. Accuracy is everything.

About Ric

Ric Kosiba is a founder of Real Numbers, a contact center capacity planning and modeling company. Ric can be reached at ric@realnumbers.com or (410) 562-1217.

Please know that we are *very* interested in learning about your business problems and challenges (and what you think of these articles). Want to improve that capacity plan? You can find Ric’s calendar and schedule time with him at realnumbers.com. Follow Ric on LinkedIn! (www.linkedin.com/in/ric-kosiba/)

Ready to optimize your contact center?

Let our experts show you how Real Numbers can transform your operations.

Join the industry leaders who have already discovered the power of data-driven workforce planning.
